The main difference between Python's float and decimal.Decimal is that float is an approximate (binary floating-point) data type, while Decimal is a decimal data type whose precision you control.
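Decimal precision is not unlimited. Arithmetic is rounded to the precision of the current context, which defaults to 28 significant digits. A minimal sketch using the standard decimal module's getcontext() and localcontext():

```python
from decimal import Decimal, getcontext, localcontext

# The default context carries 28 significant digits of precision.
print(getcontext().prec)  # 28

# 1/3 cannot be stored exactly, so the result is rounded to 28 digits.
print(Decimal(1) / Decimal(3))  # 0.3333333333333333333333333333

# Precision can be changed temporarily inside a local context.
with localcontext() as ctx:
    ctx.prec = 5
    print(Decimal(1) / Decimal(3))  # 0.33333
```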

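A float stores the closest binary approximation of the number you declare, whereas a Decimal stores its decimal digits exactly, so a value such as 0.1 is not approximated. (Decimal arithmetic still rounds a result once it exceeds the context precision.)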
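One way to see the approximation hidden inside a float is to convert it to a Decimal: Decimal(0.1) copies the float's exact binary value, while Decimal('0.1') keeps exactly the digits written in the string. A short sketch:

```python
from decimal import Decimal

# Built from a string: exactly the digits you wrote.
exact = Decimal('0.1')
print(exact)  # 0.1

# Built from a float: the exact binary value the float really holds.
from_float = Decimal(0.1)
print(from_float)
# 0.1000000000000000055511151231257827021181583404541015625

print(exact == from_float)  # False
```

This is why Decimals are usually constructed from strings rather than from float literals.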

Python decimal vs float code

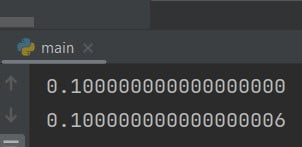

Simple example code: if we print 0.1 as a float and as a Decimal with 18 decimal places, the two give different values.

from decimal import Decimal

# Decimals

print(f"{Decimal('0.1'):.18f}")

# Float

print(f"{0.1:.18f}")

Output:

0.100000000000000000

0.100000000000000006

When you add 0.1 + 0.1 + 0.1, you would expect the result to equal 0.3, but with floats it does not.

from decimal import Decimal

# Decimals

print(Decimal('.1') + Decimal('.1') + Decimal('.1'))

# Float

print(.1 + .1 + .1)

Output:

0.3

0.30000000000000004
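Because of this, comparing float results with == is unreliable. A sketch contrasting the usual float workaround, math.isclose() with its default tolerance, against exact comparison of string-built Decimals:

```python
import math
from decimal import Decimal

# Exact equality fails for floats: each 0.1 carries a tiny binary error.
print(0.1 + 0.1 + 0.1 == 0.3)  # False

# Tolerance-based comparison is the usual float workaround.
print(math.isclose(0.1 + 0.1 + 0.1, 0.3))  # True

# Decimals built from strings compare exactly.
print(Decimal('0.1') * 3 == Decimal('0.3'))  # True
```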

Python decimal vs float runtime

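According to the benchmark in the Stack Overflow thread cited below, the decimal module was about 180x slower than float on Python 2.7, but only about 2.5x slower on Python 3.5.

If you care about Decimal performance, use Python 3: since Python 3.3 the decimal module is backed by a C implementation (libmpdec), which makes it much faster.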
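To check the ratio on your own machine, a rough sketch with the standard timeit module is below. It times only a single addition, and the operand values and iteration count are arbitrary choices for illustration, so the numbers will vary with hardware and Python version:

```python
import timeit

N = 1_000_000  # arbitrary number of repetitions

# Time the same addition with floats and with Decimals.
float_time = timeit.timeit("a + b", setup="a, b = 1.1, 2.2", number=N)
decimal_time = timeit.timeit(
    "a + b",
    setup="from decimal import Decimal; a, b = Decimal('1.1'), Decimal('2.2')",
    number=N,
)

print(f"float:   {float_time:.3f} s")
print(f"Decimal: {decimal_time:.3f} s")
print(f"Decimal / float ratio: {decimal_time / float_time:.1f}x")
```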

Source: https://stackoverflow.com/questions/41453307/decimal-python-vs-float-runtime

Do comment if you have any doubts or suggestions on this Python comparison topic.

Note: IDE: PyCharm 2021.3.3 (Community Edition)

Windows 10

Python 3.10.1

All Python examples are written for Python 3, so they may behave differently in Python 2 or in newer versions.